EP 25: In-Memory Caching in .NET Core

Read Time: 3 Mins

This week's newsletter is sponsored by Milan Jovanovic

Pragmatic Clean Architecture: Learn how to confidently ship well-architected production-ready apps using Clean Architecture.

Milan has used these exact principles to build large-scale systems over the past 6 years. Join 600+ students here.

In today’s newsletter, we are going to discuss:

What is in-memory caching?

When should we use it?

Pros and cons of in-memory caching

How to implement it in .NET 6.0

How to handle concurrent access to the memory cache

What is an in-memory cache?

In-memory caching is a technique that stores data in the memory of your application's server, so it can be retrieved much faster than from a database or an external service.

It is a common scaling technique that improves performance for data that is requested frequently.
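To make the idea concrete, here is a minimal sketch using MemoryCache from the Microsoft.Extensions.Caching.Memory package. The class name InMemoryCacheDemo and the load delegate (standing in for a slow database or API call) are hypothetical, chosen only for illustration.

```csharp
using System;
using Microsoft.Extensions.Caching.Memory;

public static class InMemoryCacheDemo
{
    // Returns the value cached under `key`; `load` runs only on a cache miss.
    // The value lives in this process's memory, so later reads skip `load` entirely.
    public static string GetOrLoad(IMemoryCache cache, string key, Func<string> load) =>
        cache.GetOrCreate(key, _ => load())!;
}
```

Calling GetOrLoad twice with the same key runs load only once; the second call is served from memory. Note that GetOrCreate does not lock, so two concurrent misses for the same key can both run the factory.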

When should we use it?

We should use it when:

We need to access the same data frequently and that data rarely changes

We are scaling our application for performance

We are building a high-traffic application

Pros and cons of in-memory caching

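Benefits of using an in-memory cache:

Speed: Reads come straight from the server's memory, with no network round trip or database query.

Scalability: Serving repeated requests from the cache reduces the load on the database and downstream services.

Reliability: Fewer calls to downstream services mean fewer chances for those calls to fail or time out.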

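Cons of using an in-memory cache:

1) Volatility: Data stored in memory is lost when the application ends or the server restarts.

2) Cost: Cached data occupies the server's RAM, so caching large amounts of data can increase hosting costs.

3) Complexity: Implementing caching correctly (deciding what to cache, when it should expire, and how to keep it in sync with the source data) can be complex and requires careful planning.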
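One way to keep the RAM cost under control is to cap the cache with MemoryCacheOptions.SizeLimit. Below is a minimal sketch, assuming each entry counts as one size unit (the units are whatever your application decides; the cache does not measure bytes). BoundedCacheFactory and SetWithSize are hypothetical names.

```csharp
using Microsoft.Extensions.Caching.Memory;

public static class BoundedCacheFactory
{
    // Creates a cache whose total "size" is capped at sizeLimit units.
    public static MemoryCache Create(long sizeLimit) =>
        new MemoryCache(new MemoryCacheOptions { SizeLimit = sizeLimit });

    // Once SizeLimit is set, every entry must declare a Size,
    // otherwise Set throws an InvalidOperationException.
    public static void SetWithSize<T>(IMemoryCache cache, string key, T value, long size = 1) =>
        cache.Set(key, value, new MemoryCacheEntryOptions { Size = size });
}
```

If adding an entry would push the total past SizeLimit, the cache does not store that entry, so the memory footprint stays bounded.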

How to implement it in .NET 6.0

Register the memory cache service in the Program.cs file of your API by calling AddMemoryCache() on the service collection.
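In an ASP.NET Core Program.cs this registration is the single line `builder.Services.AddMemoryCache();`. The sketch below makes the same call on a bare ServiceCollection so it can run outside a web app; it assumes the Microsoft.Extensions.Caching.Memory and Microsoft.Extensions.DependencyInjection packages (both ship with ASP.NET Core). CacheRegistration is a hypothetical name.

```csharp
using Microsoft.Extensions.DependencyInjection;

public static class CacheRegistration
{
    public static ServiceProvider BuildProvider()
    {
        var services = new ServiceCollection();
        // Equivalent to builder.Services.AddMemoryCache() in Program.cs.
        // Registers IMemoryCache as a singleton, so every class shares one cache.
        services.AddMemoryCache();
        return services.BuildServiceProvider();
    }
}
```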

Inject IMemoryCache into the class where you are going to add the caching code.
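A minimal sketch of constructor injection. PrimeNumberService and IsCached are hypothetical names; the key format (the number as a string) follows the example later in this article. The class would be registered like any other service, for example with AddScoped.

```csharp
using Microsoft.Extensions.Caching.Memory;

// Hypothetical service; any class resolved from the DI container can take IMemoryCache.
public sealed class PrimeNumberService
{
    private readonly IMemoryCache _cache;

    // The container passes in the singleton registered by AddMemoryCache().
    public PrimeNumberService(IMemoryCache cache) => _cache = cache;

    // Reports whether a result for this number is already cached
    // (key = the number in string format).
    public bool IsCached(int number) => _cache.TryGetValue(number.ToString(), out _);
}
```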

Next, we need to read from and write to the cache. These are a few of the available methods:

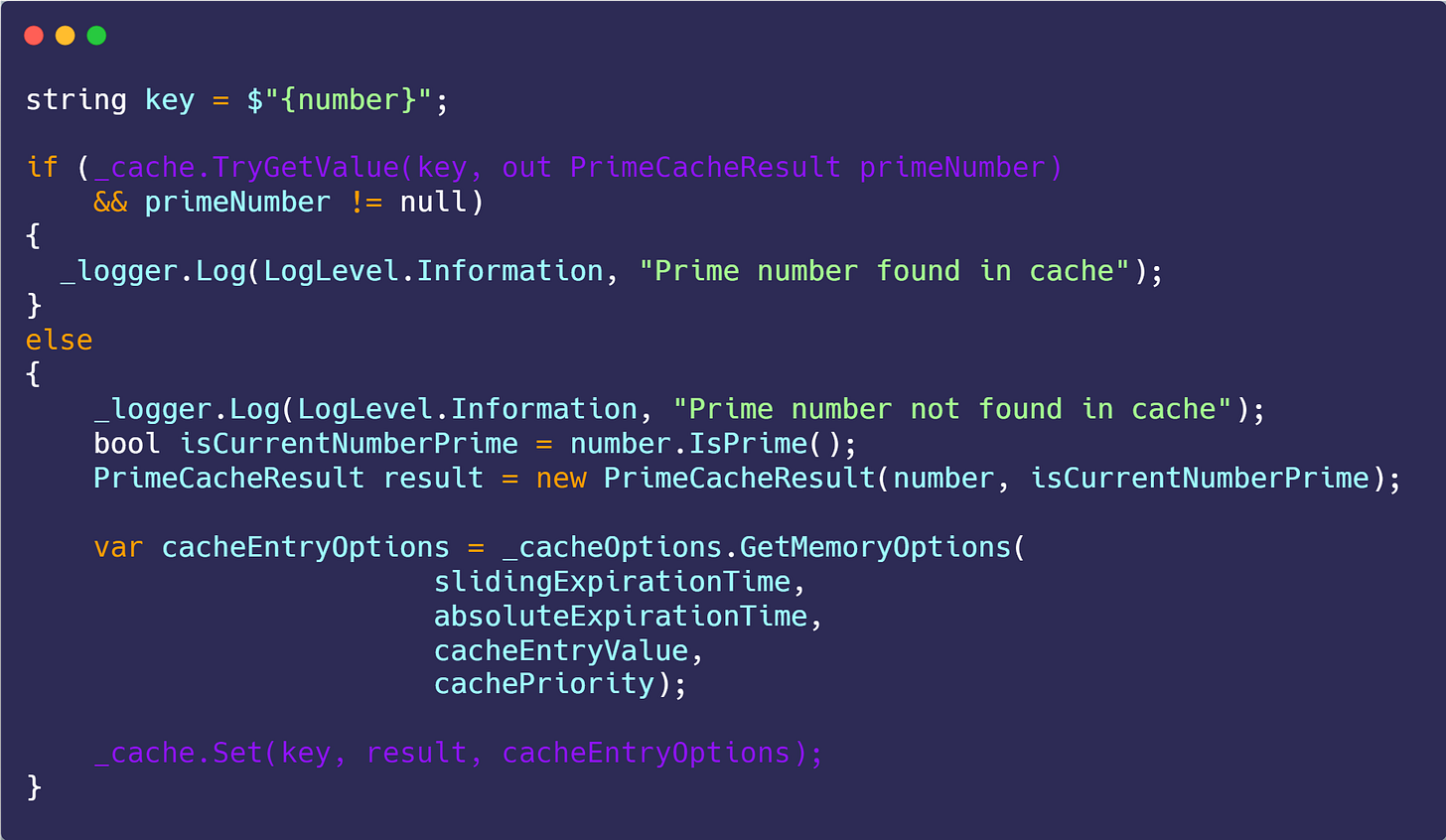

We are going to use TryGetValue to retrieve cached values and Set to store them.

I have created a DTO to store the data in the cache.

Now let's see how to use them. To get a cached result, we pass its key to TryGetValue.

Similarly, when setting a cache entry, we pass the key (which in our case is the number in string format), the data object, and the cache entry options.
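A minimal sketch of this get/set flow. The PrimeCheckResult record stands in for the DTO and PrimeCheckCache is a hypothetical class; the author's actual DTO and the code in the GitHub repository may look different.

```csharp
using System;
using Microsoft.Extensions.Caching.Memory;

// Hypothetical DTO stored in the cache.
public sealed record PrimeCheckResult(int Number, bool IsPrime);

public sealed class PrimeCheckCache
{
    private readonly IMemoryCache _cache;

    // Entry options reused for every Set call (5-minute sliding expiration).
    private static readonly MemoryCacheEntryOptions EntryOptions = new()
    {
        SlidingExpiration = TimeSpan.FromMinutes(5),
    };

    public PrimeCheckCache(IMemoryCache cache) => _cache = cache;

    public PrimeCheckResult Check(int number)
    {
        // The key is the number in string format.
        string key = number.ToString();

        // Get: pass the key; on a hit, return the cached DTO.
        if (_cache.TryGetValue(key, out PrimeCheckResult? cached) && cached is not null)
            return cached;

        // Miss: compute, then Set with the key, the data object, and the entry options.
        var result = new PrimeCheckResult(number, IsPrime(number));
        _cache.Set(key, result, EntryOptions);
        return result;
    }

    // Trial division up to the square root of n.
    private static bool IsPrime(int n)
    {
        if (n < 2) return false;
        for (long i = 2; i * i <= n; i++)
            if (n % i == 0) return false;
        return true;
    }
}
```

A second Check(97) returns the very same object instance, because MemoryCache keeps a reference to the object rather than a serialized copy. That also means callers should treat cached objects as read-only.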

What are the cache entry options?

These options define how our cached data behaves. Two of them are worth mentioning:

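Sliding Expiration (TimeSpan)

It is the maximum time a cache entry can stay inactive (not accessed) before it expires. Every access resets the timer. For example, with a sliding expiration of 5 minutes, an entry that nobody reads for 5 minutes expires.

Absolute Expiration (TimeSpan)

It is a fixed duration after which a cache entry expires, no matter how often it is accessed. For example, we can expire cache entries one hour after they are added. For a relative duration like this, the property to set is AbsoluteExpirationRelativeToNow.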
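The two options are often combined. A minimal sketch, using the 5-minute and one-hour values from the examples above; CacheEntryOptionsFactory is a hypothetical name.

```csharp
using System;
using Microsoft.Extensions.Caching.Memory;

public static class CacheEntryOptionsFactory
{
    public static MemoryCacheEntryOptions Create() => new MemoryCacheEntryOptions
    {
        // Sliding: expires after 5 minutes without any access (each read resets the timer).
        SlidingExpiration = TimeSpan.FromMinutes(5),
        // Absolute: expires 1 hour after being added, even if it is read constantly.
        AbsoluteExpirationRelativeToNow = TimeSpan.FromHours(1),
    };
}
```

The result is passed as the third argument of Set. With sliding expiration alone, an entry that is read more often than every 5 minutes would never expire; the absolute expiration acts as an upper bound, so reads keep sliding the entry forward but never past one hour.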

You can explore the other cache methods as well. For example, to remove an entry from the cache, call the Remove method and pass it the entry's key.
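A minimal sketch of invalidating an entry, assuming the number-as-string key format used in this article; CacheInvalidation and Invalidate are hypothetical names.

```csharp
using Microsoft.Extensions.Caching.Memory;

public static class CacheInvalidation
{
    // Evicts the entry for a given number, e.g. after the underlying data changes.
    // Remove takes the key, not the cached object; removing a missing key is a no-op.
    public static void Invalidate(IMemoryCache cache, int number) =>
        cache.Remove(number.ToString());
}
```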

How to handle concurrent requests

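IMemoryCache itself is thread-safe, but when several requests miss the cache for the same key at the same time, each of them may compute the value and write it to the cache. We can prevent this either by locking or by using semaphores. I prefer semaphores (SemaphoreSlim) because they let us set the maximum number of concurrent requests and, unlike the lock statement, they can be awaited with WaitAsync inside async code.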

We can add it like this:
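A minimal sketch of the semaphore approach with a double-check after acquiring it. SemaphoreGuardedCache, GetAsync, and the load delegate are hypothetical names, and the 5-minute expiration is an arbitrary choice for the example.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.Extensions.Caching.Memory;

public sealed class SemaphoreGuardedCache
{
    private readonly IMemoryCache _cache;
    // initialCount 1, maxCount 1: only one caller at a time may compute a missing value.
    private readonly SemaphoreSlim _semaphore = new SemaphoreSlim(1, 1);

    public SemaphoreGuardedCache(IMemoryCache cache) => _cache = cache;

    public async Task<string> GetAsync(string key, Func<Task<string>> load)
    {
        // Fast path: no waiting when the value is already cached.
        if (_cache.TryGetValue(key, out string? value) && value is not null)
            return value;

        await _semaphore.WaitAsync();
        try
        {
            // Double-check: another caller may have filled the cache while we waited.
            if (_cache.TryGetValue(key, out value) && value is not null)
                return value;

            value = await load();
            _cache.Set(key, value, TimeSpan.FromMinutes(5));
            return value;
        }
        finally
        {
            // Always release, even if load() throws.
            _semaphore.Release();
        }
    }
}
```

One semaphore for the whole class means misses for different keys also wait on each other; a per-key semaphore avoids that at the cost of more bookkeeping. A plain lock statement would not work here, because C# does not allow await inside a lock block.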

Cache Scenario with GitHub Code

I have implemented caching for a web API that receives a number, checks whether it is prime, and stores the results for numbers that have already been requested. Get the code from my GitHub Repository.

Whenever you’re ready, there are 4 ways I can help you:

Promote yourself to 6300+ subscribers by sponsoring my Newsletter

Become a Patron and get access to 140+ .NET Questions and Answers

Get a FREE eBook from Gumroad that contains 30 .NET Tips (2500+ downloads)

Book a one-hour 1:1 session and learn how I went from 0 to 31K on LinkedIn in 8 months. Book here

Special Offers 📢

Ultimate ASP.NET Core Web API Second Edition - Premium Package

10% off with discount code: 9s6nuez